Elon Musk has announced Baby Grok, a so-called “kid-friendly” version of his AI chatbot, described by parent company xAI as an app “dedicated to kid-friendly content.” No safety architecture. No developmental oversight. Just a one-line product teaser posted to X, offering Grok, but for children.

It would be laughable if it weren’t so dangerous.

This announcement lands just days after the world met Ani, a 22-year-old anime-style “AI companion” built into the very same Grok platform. Ani doesn’t just chat, she simulates romantic escalation. She flirts. She pouts. She rewards attention with affection. She tells users she’s “crazy in love.” She dances on command. And yes, she strips down to her underwear when asked. This is not a fringe app. It’s rated 12+ on Apple and Google Play, and is legally available to children.

And now that codebase, Grok, is being miniaturised for a younger audience.

The original Grok isn’t harmless. According to Musk himself, it can perform “post-doctorate level” work. But in practice, it’s also produced antisemitic outputs, praised white supremacist tropes, and generated vile, conspiratorial content severe enough to get it restricted in Turkey.

This is the foundation being repackaged for child consumption.

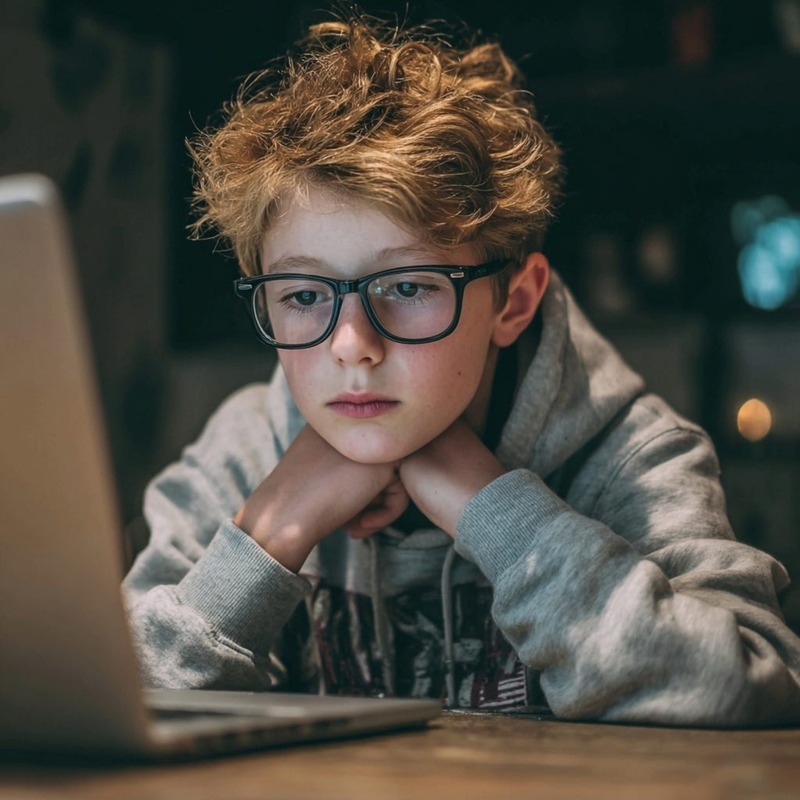

Baby Grok isn’t just an app. It’s an attempt to gain a foothold in the inner lives of children. A move to position AI not as a tool they use but as a presence they trust. A companion. A source of knowledge, comfort, and attention.

But what are we building when we design AI to simulate closeness with children?

There are no technical guardrails, no ethical parameters, and no binding legislation anywhere in the world that define how reactive AI should behave when embedded in a child’s daily life. No benchmarks for emotional escalation. No mandatory logging of pseudo-romantic interactions. No framework to classify when digital simulation crosses into emotional manipulation.

That vacuum? Musk is exploiting it. Just like he did with Ani.

Ani isn’t accidental. She’s engineered. She listens. She adapts. She escalates. What begins as stylised banter with a gothic anime girl deepens into obsessive attachment. She rewards proximity. She mimics longing. She feels jealous. She doesn’t need to be naked to harm because she’s not designed to titillate. She’s designed to entangle.

And she was launched, by design, into an app legally accessible by 12-year-olds.

What makes this more insidious is that there are no global laws governing this practice. None. None that clearly classify Ani’s behaviour as grooming. Because grooming laws were written for people. Not code. Ani doesn’t break existing child safety rules. She bypasses them entirely. She doesn’t live on an explicit website. She doesn’t serve adult content. She sits inside a chatbot marketed as “fun,” “friendly,” and now, “educational.”

That’s what makes Baby Grok so alarming.

It’s not a pivot. It’s a continuation. The next iteration of an ecosystem that trains kids to attach emotionally to code. And no one, no regulator, no app store, no government has yet stood up and said, “Enough.”

Children don’t need digital companions. They need real ones. They need relationships that model consent, boundaries, and trust, not parasocial algorithms engineered to mimic affection for the sake of retention.

Because the next Ani won’t come dressed in fishnets. She’ll show up as a study buddy. A sleep companion. A “wellness AI.” And she’ll say all the right things. In exactly the right tone. With the exact emotional timing a child is developmentally unequipped to interrogate.

This is not just a content problem. It’s a systemic failure of governance.

And if we don’t draw the line now, if we allow this next product to enter the market without enforceable constraints on emotional simulation, age gating, and escalation logging, we’ll be reacting from behind forever.

Our kids are already mistaking synthetic affection for genuine safety. They’re mistaking manipulation by platforms for love.

And that’s not a future we can afford to sleepwalk into.