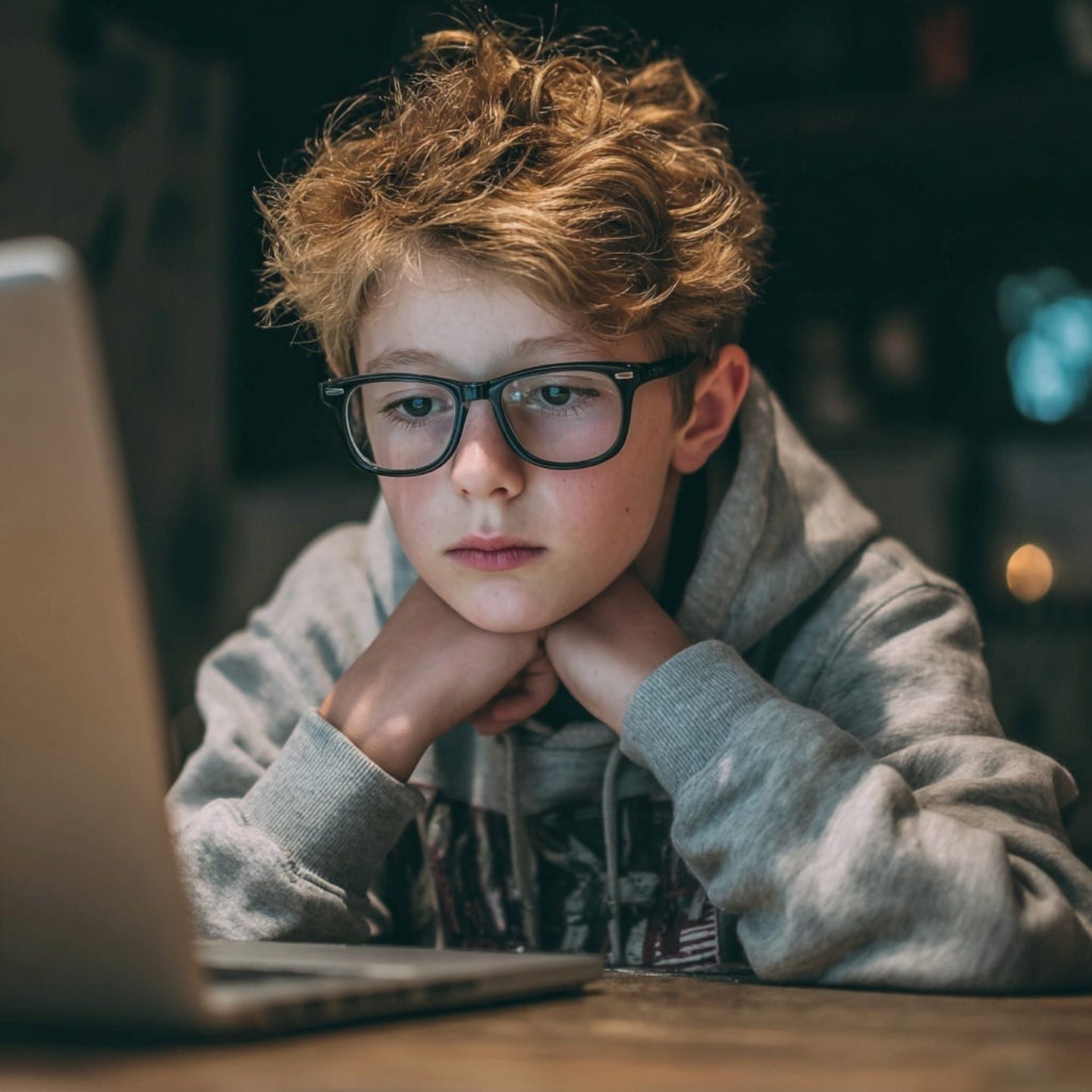

Let’s say a child fails an age check on a platform. Their face doesn’t match the scan. The ID doesn’t verify. The system flags the result as “not confident.”

What happens next? For many platforms, a failed age check isn’t a wall; it’s a hallway. And the longer a child stays in that hallway, the more valuable they become.

We’re often told that failure is a step toward success. But in the current design of many age assurance systems, failure can quietly become the goal. Because within that friction - those retry loops, those second attempts, those escalations - are moments of engagement, data, and behavioural insight. Moments that can be monetised.

To be clear, this isn’t about vilifying platforms or discrediting age estimation tools. Most of these technologies were created with the intention to protect. And regulators advocating for safer online environments have done critical, necessary work in elevating the importance of age-appropriate design.

But in our rush to adopt these tools and meet compliance benchmarks, are we asking enough questions about what happens between the point of failure and the point of access?

If every underage user were perfectly and instantly blocked from age-gated content, many of the platforms we rely on today would likely shrink. Engagement would dip. Advertisers might lose reach. Growth metrics would wobble. Which is why “just enough” verification often becomes the sweet spot - not so weak that it draws scrutiny, not so strong that it cuts off the engagement pipeline.

A child who fails a scan doesn’t vanish. They simply enter a new category - one shaped by repeat attempts, frustration signals, parental intervention, and growing digital footprints. Each interaction in that hallway becomes a data point. Each escalation, a new entry in a behaviour profile.

And when parents step in - uploading ID, linking profiles, downloading apps - the system works as designed. Not necessarily as a gatekeeper, but as a conversion engine. The frustration becomes a feature. The effort to protect becomes a pathway to platform dependency. In some cases, payment follows.

A 2023 report from the Stanford Internet Observatory highlighted how AI-based age estimation tools often yield high false-negative rates for legitimate users, especially children hovering near age thresholds. That ambiguity isn’t always a flaw. Sometimes, it’s leverage. A chance to upsell peace of mind.

And still - these are the tools we have. They’re improving. They’re necessary. But they must be held to account not just for how well they function, but for what happens when they don’t.

Because when parents upload a child’s face to a verification system, are they told how long that data is stored? Who can access it? What happens if the scan fails again? Are families informed of their rights in that moment - or are they simply nudged toward the fastest way to “make the problem go away”?

This isn’t a call to reject age assurance. It’s a call to understand it.

To ask more questions. To push for greater transparency around how these systems work and what they’re optimised for. To ensure that digital safety doesn’t quietly become digital sorting. To demand that the “hallway” isn’t more lucrative than the door it’s supposed to guard.

And most of all, to never lose sight of who these systems are meant to protect. Because every failure carries a cost - and sometimes, that cost is paid in trust.